Fun with high-performance computing

I am having fun with high performance computing (HPC) and Slurm.

Using a Python library (pcluster, from AWS ParallelCluster) on Amazon Web Services, I have spun up an HPC cluster with one head node and 32 worker nodes. (See image.) Each worker node has 32 cores and about 256 GB of RAM, which means I have 1,024 (32 × 32) cores at my disposal.
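The capacity figures follow directly from the node specifications. The total-memory and per-core lines are derived here rather than stated in the post, and the per-core figure assumes memory is split evenly across a node's cores:

```latex
\begin{aligned}
\text{cores} &= 32~\text{nodes} \times 32~\tfrac{\text{cores}}{\text{node}} = 1024~\text{cores} \\
\text{memory} &\approx 32~\text{nodes} \times 256~\tfrac{\text{GB}}{\text{node}} = 8192~\text{GB} \approx 8~\text{TB} \\
\text{memory per core} &\approx \tfrac{256~\text{GB}}{32~\text{cores}} = 8~\text{GB}
\end{aligned}
```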

I then created a list of 710 jobs. Each job takes a set of journal articles, applies natural language processing to them, and outputs a data set called a "study carrel". Each job also does a bit of modeling against its carrel. Altogether, the jobs cover tens of thousands of articles.
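The post does not show how the 710-item list is handed to Slurm. One common pattern, assumed here and not necessarily what the attached sbatch file does, is a Slurm job array: the list lives in a text file with one job per line (the file name `jobs.txt` and the carrel names are hypothetical), an `#SBATCH --array=...` directive launches one task per line, and each task reads the `SLURM_ARRAY_TASK_ID` environment variable that Slurm sets to pick its own line. The helper function names below are illustrative only.

```python
from __future__ import annotations

import os
from typing import Mapping


def parse_jobs(text: str) -> list[str]:
    """Return one job per non-blank line, e.g. one study carrel name per line."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def array_directive(jobs: list[str], max_running: int | None = None) -> str:
    """Build an #SBATCH --array line with one zero-based index per job.

    An optional "%N" suffix tells Slurm to run at most N array tasks at once.
    """
    spec = f"0-{len(jobs) - 1}"
    if max_running is not None:
        spec += f"%{max_running}"
    return f"#SBATCH --array={spec}"


def current_task_id(env: Mapping[str, str] = os.environ) -> int:
    """Read the array index Slurm sets for each task of a job array."""
    return int(env["SLURM_ARRAY_TASK_ID"])


def job_for_task(jobs: list[str], task_id: int) -> str:
    """Map an array index to its job, rejecting indexes outside the list."""
    if not 0 <= task_id < len(jobs):
        raise IndexError(f"task {task_id} is outside 0..{len(jobs) - 1}")
    return jobs[task_id]
```

With a 710-line list, `array_directive` yields `#SBATCH --array=0-709`. Inside each array task, a worker script would call `job_for_task(parse_jobs(Path("jobs.txt").read_text()), current_task_id())` and then run the processing for that one carrel. Keeping the list in a file means the sbatch script itself does not change when jobs are added or removed.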

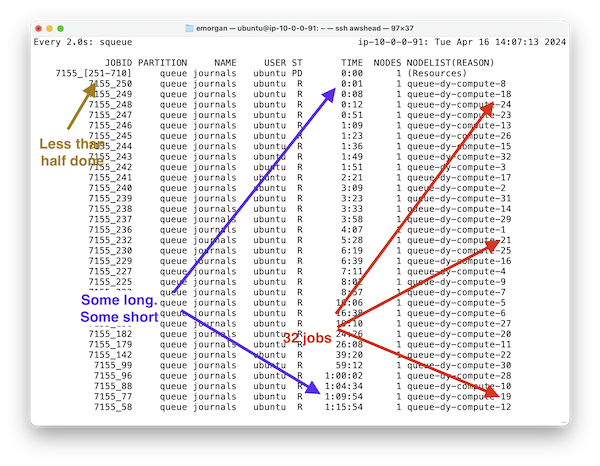

I submit the jobs to the cluster using the attached Slurm sbatch file, which is really a glorified shell script. Nodes are spun up, and the work continues until all the jobs are completed. I can watch the progress of the jobs by monitoring the queue as well as a few log files. In the second attachment you can see thirty-two nodes at work. Some have been working for only a few minutes, while others have been working for more than an hour. The jobs vary in size: some have only a few dozen articles to process, while others have hundreds. So far, about 125 jobs have been completed.
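To watch the queue from a script rather than by eye, one option is to ask `squeue` for machine-readable output and summarize it. This sketch assumes output from `squeue --noheader --format='%i|%T|%M|%N'` (job ID, state, elapsed time, node list); the pipe delimiter, the function names, and the node names in the sample are arbitrary choices, not taken from the post. Note that `squeue` lists only pending and running jobs, so completed jobs must be counted another way, for example from the log files.

```python
from __future__ import annotations

import subprocess
from collections import Counter
from dataclasses import dataclass

SQUEUE_FORMAT = "%i|%T|%M|%N"  # job id | state | time used | node list


@dataclass
class QueueEntry:
    job_id: str
    state: str
    elapsed_seconds: int
    nodes: str


def parse_elapsed(text: str) -> int:
    """Convert squeue's %M time ([days-][hours:]minutes:seconds) to seconds.

    Non-numeric values such as "INVALID" are treated as zero.
    """
    try:
        days = 0
        if "-" in text:
            day_part, text = text.split("-", 1)
            days = int(day_part)
        seconds = 0
        for part in text.split(":"):  # M:SS or H:MM:SS, most significant first
            seconds = seconds * 60 + int(part)
        return days * 86_400 + seconds
    except ValueError:
        return 0


def parse_squeue(output: str) -> list[QueueEntry]:
    """Parse pipe-delimited squeue lines into QueueEntry records."""
    entries = []
    for line in output.splitlines():
        if not line.strip():
            continue
        job_id, state, elapsed, nodes = line.split("|", 3)
        entries.append(QueueEntry(job_id, state, parse_elapsed(elapsed), nodes))
    return entries


def summarize(entries: list[QueueEntry]) -> tuple[Counter, QueueEntry | None]:
    """Count jobs per state and find the longest-running job, if any."""
    states = Counter(e.state for e in entries)
    running = [e for e in entries if e.state == "RUNNING"]
    longest = max(running, key=lambda e: e.elapsed_seconds, default=None)
    return states, longest


def fetch_queue() -> str:
    """Run squeue on the head node (requires Slurm to be installed)."""
    return subprocess.run(
        ["squeue", "--noheader", f"--format={SQUEUE_FORMAT}"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
```

On the head node, `summarize(parse_squeue(fetch_queue()))` gives a quick count of running and pending jobs plus the one that has been running longest, which is the same picture the post describes reading off the queue by hand.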

Processing in parallel is a very powerful computing technique. When one has access to multiple computers, or to one very big computer, parallel processing can make long computations short. For example, if I were not processing my study carrels in parallel, the work would take weeks; in this case, it takes only a few hours.
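The weeks-versus-hours contrast comes from spreading independent jobs across workers. A rough way to see it is to simulate greedy dispatch, where each job goes to whichever worker frees up first. This is a simplification for illustration, not Slurm's actual scheduling algorithm. The serial time is the sum of all job durations, while the parallel wall-clock time (the makespan) is when the last worker finishes. The durations in the usage lines are made-up placeholders, not measurements from this run, and treating each of the 32 nodes as one worker is an assumption.

```python
from __future__ import annotations

import heapq


def greedy_makespan(durations: list[float], workers: int) -> float:
    """Wall-clock time when jobs are handed, in order, to the first free worker."""
    if workers < 1:
        raise ValueError("need at least one worker")
    finish_times = [0.0] * workers  # when each worker next becomes free
    heapq.heapify(finish_times)
    for d in durations:
        free_at = heapq.heappop(finish_times)  # the worker that frees up soonest
        heapq.heappush(finish_times, free_at + d)
    return max(finish_times)


def longest_first_makespan(durations: list[float], workers: int) -> float:
    """Same dispatch, but the biggest jobs are queued first."""
    return greedy_makespan(sorted(durations, reverse=True), workers)


# Hypothetical durations in minutes for 710 jobs (placeholders, not measured).
durations = [5 + (i * 37) % 115 for i in range(710)]
serial_hours = sum(durations) / 60
parallel_hours = greedy_makespan(durations, workers=32) / 60
print(f"serial: {serial_hours:.1f} h, on 32 workers: {parallel_hours:.1f} h")
```

Queuing the largest jobs first usually shortens the makespan, because a long job started late would otherwise keep one node busy after all the others have finished.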

Fun with HPCs.

Creator: Eric Lease Morgan

Source: This content was originally shared on a Slack channel

Date created: 2024-04-30

Date updated: 2024-04-30

Subject(s): High-Performance Computing; Amazon Web Services

URL: https://distantreader.org/blog/fun-with-hpc/